Chapter 4 of the fast.ai book takes you on the journey under the hood. This chapter is about laying the foundation and might not be easy to follow at the first attempt. When I finished chapter 4 for the first time, I realized, I need to slow down and take the time to understand all the building blocks. It is also mentioned in the book that this is one of the hardest chapters.

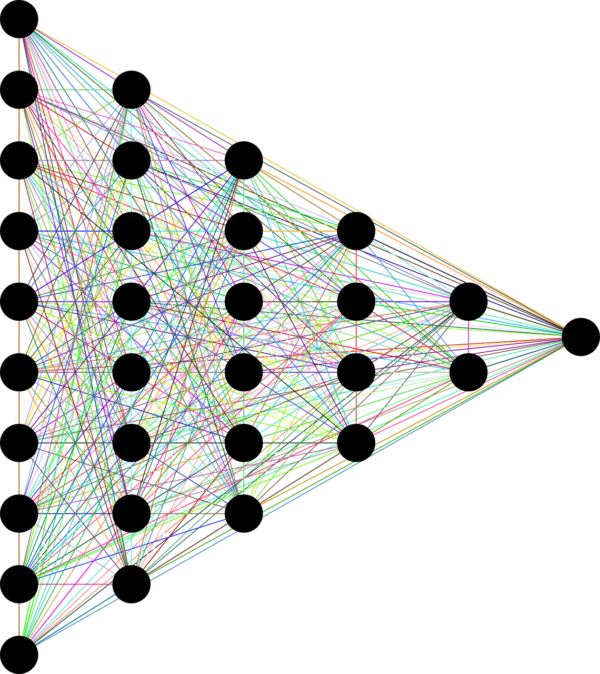

The most important concepts you learn in this chapter are the roles of arrays and tensors. Also, you will learn about stochastic gradient descent (SGD) and the idea of a loss function.

How to prepare

I suggest doing some quick tutorials on how to work with arrays and tensors in deep learning if you are not already familiar with it. Jeremy does an excellent job in explaining all the concepts. However, for a visual person like me, I would learn better if I saw some illustrations about how tensors hold data.

For SGD and loss function, I went back to Andrew Ng’s machine learning course (Week 1 is sufficient) and reviewed gradient descent, calculus, linear algebra, and cost function (different name for loss function).

Andrew Ng explains the concepts with examples and illustrations, and it works great for me. However, I still like the top to bottom learning approach that Jeremy is using in his teaching.

After reviewing these concepts, I went back to chapter 4 and listened to the lessons. While listening, I did all the steps on a notebook from scratch. When you write the code yourself, you get to make mistakes, get to know how different methods and functions work.

Few important learning

- PyTorch tensors are like NumPy arrays, with the difference that tensors can utilize GPU

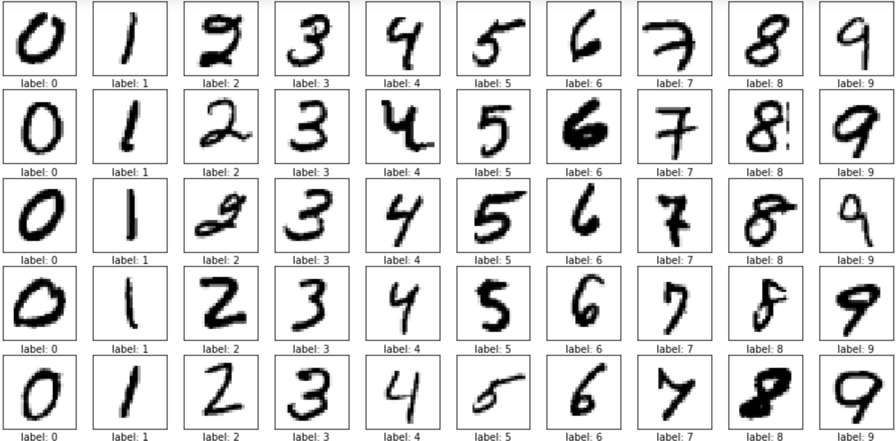

- In the MNIST problem, we needed a better loss function to update the predictions with slight changes to parameters. If the loss function is not sensitive to small changes in the parameters, we will get zero derivative which will not change the weights in the next step and no change in predictions.

- Stochastic gradient descent is used when we are using a batch of the dataset to update the parameters. Gradient descent (GD) is used when we do the training on the whole dataset. Since the whole dataset can be big and taking a long time to run, we usually use the SGD.

- To get a better intuition of the code and methods, I started checking the docs and examples. It really helps. (Examples: Tensor.view() and Torch.unsqueeze())

Dive Deeper

It is highly recommended to answer the questions at the end of the chapter. It is an excellent way to review the material and test your learning. This time, I challenged myself with the “Further Research” section as well. In the second item, you are asked to complete all the steps using the full MNIST dataset. It is like a personal project which helps me think about how to solve this problem.